Prompt Engineering: Incorporating ChatGPT-5's Prompt Optimizer into Your Workflow

Reference: https://www.promptingguide.ai/models/chatgpt

ChatGPT-5 has more capabilities and is more complex than earlier models, so it needs a strong, well-structured, well-engineered prompt to reach its potential. Vague prompts risk misinterpretation and inconsistent, variable outputs: the model can often guess your intention, but as with any vague description it can also guess wrongly. Prompts therefore need guardrails and controls that align the communication with how the system works.

For a prompt optimizer, the goal is to flesh out the controls, context, scope, explicit instructions, and handling of inconsistencies, supplying the patterns and structure that produce the best outcome. The practical result, which you can adopt at work, is a tool that rewrites your prompt so ChatGPT (or another AI) gives better results by restructuring it for clarity, detail, and efficiency. OpenAI's built-in Prompt Optimizer is available in the OpenAI Playground and is tailored specifically to the GPT-5 models.

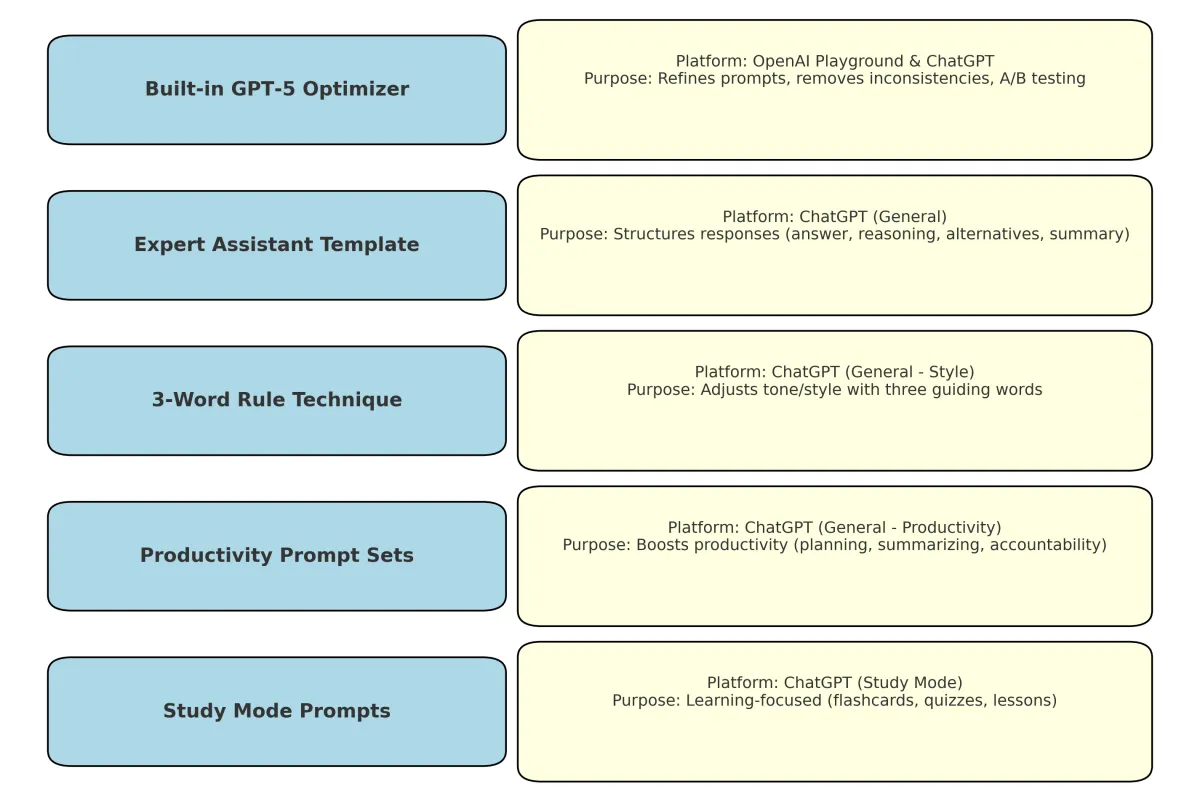

1. OpenAI’s Built-In GPT-5 Prompt Optimizer

Platform: OpenAI Playground & ChatGPT interface

Purpose: Automatically rewrites prompts for clarity, structure, and higher performance.

Details:

Fixes ambiguity, contradictions, unclear formatting.

Boosts accuracy, brevity, creativity, speed, or safety.

Allows A/B testing and reusable Prompt Objects.

2. Community and Structured Prompt Templates

a) Expert Assistant Prompt Template

Platform: ChatGPT (general)

Purpose: Structures output into:

Direct answer

Reasoning breakdown

Alternative suggestions

Short summary/next steps
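As a minimal sketch, the four-part structure can be captured in a reusable template that wraps any question. The instruction wording and the function name expert_assistant_prompt are illustrative assumptions, not a published version of the template.

```python
# Hypothetical helper: wraps a question in the four-part Expert Assistant structure.
SECTIONS = [
    "Direct answer",
    "Reasoning breakdown",
    "Alternative suggestions",
    "Short summary/next steps",
]


def expert_assistant_prompt(question: str) -> str:
    """Return a prompt asking the model to answer in four labeled sections."""
    lines = [
        "You are an expert assistant.",
        f"Question: {question}",
        "Structure your response under these headings, in this order:",
    ]
    # Number the sections so the model keeps them in a fixed order.
    lines += [f"{i}. {name}" for i, name in enumerate(SECTIONS, start=1)]
    return "\n".join(lines)


print(expert_assistant_prompt("How should I version prompts across a team?"))
```

Numbering the headings is a design choice: it gives the model an explicit order and makes the response easy to parse or compare across runs.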

b) 3-Word Rule Technique

Platform: ChatGPT (general)

Purpose: Refines style/tone by appending three role-defining words, e.g. 'like a teacher', 'like a coach'.

Helps personalize and humanize responses.

c) Productivity Prompt Sets

Platform: ChatGPT (general)

Purpose: Improves prompts for productivity tasks such as:

Drafting emails

Summarizing text

Accountability workflows

Planning and task management

d) Study Mode Prompts

Platform: ChatGPT (Study Mode)

Purpose: Optimized educational prompts such as:

Flashcards

Micro-lessons

Quizzes

Personalized learning guidance

Reference Links

3-Word Rule: https://www.tomsguide.com/ai/i-tried-the-3-word-rule-with-chatgpt-5-and-i-got-way-better-responses-7-prompts-to-try-now

Image Prompt Optimization: https://www.tomsguide.com/ai/chatgpt/how-to-use-chatgpt-5-to-level-up-your-image-prompts

Game-Changing Prompt: https://www.tomsguide.com/ai/this-ultimate-prompt-unlocks-chatgpt-5s-full-potential-and-its-surprisingly-simple

Where to Find It

Visit the OpenAI Developer Platform (i.e., go to platform.openai.com and log in).

Navigate to the Chat Playground (sometimes labeled as "Chat" or "Completions" Playground).

Once you paste your prompt into the input box, you should see an "Optimize" button nearby.

Clicking this will generate a refined, structured version of your prompt — complete with sections like Role, Task, Constraints, Output Format, and Checks.

Alternatively, some references suggest you can access the Prompt Optimizer directly via a URL like: platform.openai.com/chat/edit?models=gpt-5&optimize=true
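The sectioned layout the optimizer produces can also be drafted by hand before you click Optimize, which makes the before/after comparison easier to read. The sketch below assembles a prompt from the section names listed above (Role, Task, Constraints, Output Format, Checks); the class name and the plain-text heading style are assumptions, not the optimizer's actual output format.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredPrompt:
    """Hypothetical container for the sections named by the optimizer."""
    role: str
    task: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""
    checks: list[str] = field(default_factory=list)

    def render(self) -> str:
        parts = [f"Role:\n{self.role}", f"Task:\n{self.task}"]
        if self.constraints:
            parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        if self.output_format:
            parts.append(f"Output Format:\n{self.output_format}")
        if self.checks:
            parts.append("Checks:\n" + "\n".join(f"- {c}" for c in self.checks))
        # A blank line between sections keeps them visually separate.
        return "\n\n".join(parts)


prompt = StructuredPrompt(
    role="You are a senior technical editor.",
    task="Summarize the attached release notes for customers.",
    constraints=["Maximum 150 words", "No internal ticket numbers"],
    output_format="Three bullet points followed by one sentence of next steps.",
    checks=["Every bullet maps to an item in the notes"],
)
print(prompt.render())
```

Empty optional sections are skipped, so the same class works for quick prompts and fully specified ones.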

What It Does

When you hit "Optimize," the tool:

Cleans up contradictions (e.g., conflicting instructions like "be brief" + "explain every detail")

Structures the prompt into clear categories—role, tasks, constraints, deliverables, etc.

Offers a built-in A/B comparison mode, so you can test the original versus optimized prompts side by side.
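To illustrate the kind of conflict the optimizer cleans up, here is a deliberately naive pre-check that flags known contradictory instruction pairs before a prompt is submitted. The pair list is a hypothetical example and plain substring matching is an assumption; this is not a description of how the optimizer works internally.

```python
# Hypothetical list of instruction pairs that pull in opposite directions.
CONFLICTING_PAIRS = [
    ("be brief", "explain every detail"),
    ("use bullet points only", "write in paragraphs"),
    ("be formal", "be casual"),
]


def find_contradictions(prompt: str) -> list[tuple[str, str]]:
    """Return every known pair whose two phrases both appear in the prompt."""
    text = prompt.lower()  # case-insensitive substring matching
    return [(a, b) for a, b in CONFLICTING_PAIRS if a in text and b in text]


print(find_contradictions("Be brief, but explain every detail of the API."))
# [('be brief', 'explain every detail')]
```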

To use:

Paste your prompt

Click Optimize

Review the refined, structured version generated by the tool

Optional direct link pattern: ...?models=gpt-5&optimize=true

Platform Comparison

Managing Inputs for Prompt Rewriter and Generative AI Tools

1) Where JSON/XML shows up (and where it doesn’t)

• UI-only / browser extensions (AIPRM, FlowGPT, MidJourney, KREA): inputs managed via forms or commands (e.g., --ar, --stylize)

• REST/SDK APIs (Stable Diffusion, Runway, Replicate, OpenAI): inputs as JSON payloads

• Node/graph runtimes (ComfyUI, InvokeAI): graph JSON with nodes/edges/params

• XML is rare; JSON dominates.

2) Typical JSON schemas and how to manage inputs

A) Prompt Rewriter stage

{
  "task": "rewrite_prompt",
  "platform": "image|video",
  "target_model": "midjourney|sdxl|runway",
  "style_guide": { "level_of_detail": "high", "tone": "cinematic" },
  "input": { "idea": "lady justice...", "constraints": ["no text"], "negatives": ["blurry"] }
}
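A minimal sketch of what a rewriter stage might do with this request: merge the idea and style guide into one prompt string and keep exclusions separate so they can go to a negative-prompt field. The function name, the comma-joined output, and the rule that moves "no X" constraints into the negatives are assumptions; a real rewriter would usually call a language model at this point.

```python
# Example request using one option from each enum above; the idea text
# is taken from the neutral brief later in this document.
REQUEST = {
    "task": "rewrite_prompt",
    "platform": "image",
    "target_model": "sdxl",
    "style_guide": {"level_of_detail": "high", "tone": "cinematic"},
    "input": {"idea": "Lady Justice in storm",
              "constraints": ["no text"], "negatives": ["blurry"]},
}


def rewrite_prompt(request: dict) -> dict:
    """Deterministic stand-in for the rewriter (a real one would call an LLM)."""
    if request.get("task") != "rewrite_prompt":
        raise ValueError(f"unsupported task: {request.get('task')}")
    style = request.get("style_guide", {})
    inp = request["input"]
    parts = [inp["idea"]]
    if "tone" in style:
        parts.append(f"{style['tone']} style")
    if style.get("level_of_detail") == "high":
        parts.append("highly detailed")
    negatives = list(inp.get("negatives", []))
    for constraint in inp.get("constraints", []):
        # Assumption: "no X" constraints are moved to the negatives, because
        # naming X in the positive prompt can pull it into the image.
        if constraint.lower().startswith("no "):
            negatives.append(constraint[3:])
        else:
            parts.append(constraint)
    return {
        "target_model": request["target_model"],
        "prompt": ", ".join(parts),
        "negative_prompt": ", ".join(negatives),
    }


print(rewrite_prompt(REQUEST))
```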

B) Image generation (Stable Diffusion)

{
  "prompt": "epic cinematic portrait...",
  "negative_prompt": "blurry, extra fingers",
  "width": 1024,
  "height": 1024,
  "steps": 30,
  "cfg_scale": 6.5,
  "seed": 123456789
}
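Before sending a payload like this, it can help to validate it locally. The checks below are assumptions based on common Stable Diffusion practice (width and height divisible by 8, a positive step count and CFG scale, a seed that fits in an unsigned 32-bit integer); adjust them to whatever your provider actually documents.

```python
def validate_sd_payload(payload: dict) -> list[str]:
    """Return a list of problems; an empty list means the payload passed."""
    errors = []
    if not payload.get("prompt", "").strip():
        errors.append("prompt is empty")
    for key in ("width", "height"):
        value = payload.get(key)
        # Assumption: SD-family models expect dimensions divisible by 8.
        if not isinstance(value, int) or value <= 0 or value % 8:
            errors.append(f"{key} must be a positive multiple of 8")
    if not isinstance(payload.get("steps"), int) or payload["steps"] < 1:
        errors.append("steps must be a positive integer")
    if not isinstance(payload.get("cfg_scale"), (int, float)) or payload["cfg_scale"] <= 0:
        errors.append("cfg_scale must be a positive number")
    seed = payload.get("seed")
    # Assumption: seeds are treated as unsigned 32-bit integers.
    if not isinstance(seed, int) or not 0 <= seed < 2**32:
        errors.append("seed must be an integer in [0, 2**32)")
    return errors


payload = {"prompt": "epic cinematic portrait", "negative_prompt": "blurry, extra fingers",
           "width": 1024, "height": 1024, "steps": 30, "cfg_scale": 6.5, "seed": 123456789}
print(validate_sd_payload(payload))  # []
```

Returning a list instead of raising on the first error lets you report every problem with a payload at once.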

C) Video generation (Runway / Pika / Kling)

{
  "prompt": "Lady Justice on marble steps...",
  "duration": 5,
  "fps": 24,
  "resolution": "1080p",
  "camera": { "movement": "low-angle sweep" },
  "motion": { "wind": "gentle" }
}
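Duration and frame rate together fix how many frames the model must generate (here 5 s × 24 fps = 120 frames), which is worth checking before a job is submitted. A small sketch, assuming the payload keys shown above and a hypothetical mapping from resolution labels to 16:9 pixel sizes:

```python
# Assumed 16:9 pixel sizes for common resolution labels.
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080)}


def frame_budget(payload: dict) -> dict:
    """Derive the frame count and pixel size from a video payload."""
    frames = round(payload["duration"] * payload["fps"])
    width, height = RESOLUTIONS[payload["resolution"]]
    return {"frames": frames, "width": width, "height": height}


video = {"prompt": "Lady Justice on marble steps", "duration": 5, "fps": 24, "resolution": "1080p"}
print(frame_budget(video))  # {'frames': 120, 'width': 1920, 'height': 1080}
```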

D) Graph workflows (ComfyUI, simplified schematic)

{
  "nodes": {
    "clip_text": { "type": "CLIPTextEncode", "inputs": { "text": "epic cinematic..." } },
    "sampler": { "type": "KSampler", "inputs": { "steps": 28, "cfg": 7.0, "seed": 123 } }
  },
  "wires": [ ["clip_text.output", "sampler.cond"] ]
}
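The "wires" list is what turns the nodes into a pipeline. The sketch below checks that every wire points at a declared node and derives an execution order with the standard library's graphlib.TopologicalSorter (Python 3.9+). It assumes the simplified "node.port" wire notation used above, which is not necessarily ComfyUI's own file format.

```python
from graphlib import TopologicalSorter

workflow = {
    "nodes": {
        "clip_text": {"type": "CLIPTextEncode", "inputs": {"text": "epic cinematic..."}},
        "sampler": {"type": "KSampler", "inputs": {"steps": 28, "cfg": 7.0, "seed": 123}},
    },
    "wires": [["clip_text.output", "sampler.cond"]],
}


def execution_order(graph: dict) -> list[str]:
    """Validate the wires and return node ids in dependency order."""
    nodes = graph["nodes"]
    deps = {name: set() for name in nodes}  # node -> nodes it depends on
    for src, dst in graph["wires"]:
        src_node, dst_node = src.split(".", 1)[0], dst.split(".", 1)[0]
        for n in (src_node, dst_node):
            if n not in nodes:
                raise KeyError(f"wire references unknown node: {n}")
        deps[dst_node].add(src_node)  # the destination runs after the source
    # static_order() raises graphlib.CycleError if the wires form a loop.
    return list(TopologicalSorter(deps).static_order())


print(execution_order(workflow))  # ['clip_text', 'sampler']
```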

3) Managing inputs across platforms

1. Define a neutral schema (idea, subject, setting, mood, lighting, camera, detail_level, negatives).

2. Map to each target:
   - MidJourney: string with flags (--ar, --stylize, --chaos)
   - Stable Diffusion: JSON fields (prompt, steps, cfg, seed)
   - Runway: JSON with verbs for camera/motion
   - ComfyUI: CLIPTextEncode text field

3. Guardrails: JSON Schema validation, vocab lists, prompt length caps

4. Observability: log prompt, negatives, seed, model version

5. A/B testing with prompt variants.
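Steps 3 and 4 can be combined into one gate that every request passes through. This is a minimal sketch: the required-field check stands in for full JSON Schema validation (a library such as jsonschema would do that properly), and the 400-character cap, logger name, and log fields are assumptions.

```python
import json
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("prompt_gateway")  # hypothetical logger name

# Fields of the neutral schema from step 1.
REQUIRED = ("idea", "subject", "setting", "mood", "lighting", "camera", "detail_level", "negatives")
MAX_PROMPT_CHARS = 400  # assumed length cap


def check_brief(brief: dict) -> None:
    """Raise ValueError if the neutral brief is missing fields or too long."""
    missing = [k for k in REQUIRED if k not in brief]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if not isinstance(brief["negatives"], list):
        raise ValueError("negatives must be a list")
    text = " ".join(str(brief[k]) for k in REQUIRED if k != "negatives")
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError(f"brief text exceeds {MAX_PROMPT_CHARS} characters")


def log_generation(prompt: str, negatives: list[str], seed: int, model_version: str) -> str:
    """Emit one JSON log line with what is needed to reproduce a run."""
    record = json.dumps({"prompt": prompt, "negatives": negatives,
                         "seed": seed, "model_version": model_version})
    log.info(record)
    return record
```

Logging one JSON object per line keeps the records easy to filter later, for example when comparing the variants in an A/B test.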

4) Example end-to-end neutral brief

{
  "idea": "Lady Justice in storm",
  "subject": "blindfolded Lady Justice with sword and scales",
  "setting": "courthouse steps, storm clouds",
  "mood": "epic",
  "lighting": "dramatic rays",
  "camera": "low-angle push-in",
  "detail_level": "ultra-realistic",
  "negatives": ["blurry", "extra limbs"]
}
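Applied to this brief, the per-target mapping from section 3 might look like the sketch below: one function joins the descriptive fields into a MidJourney-style string with flags, another builds a Stable Diffusion-style JSON payload. The field order and all default values are assumptions for illustration; --ar and --stylize are the MidJourney flags mentioned earlier, and --no is MidJourney's negative-prompt parameter.

```python
BRIEF = {
    "idea": "Lady Justice in storm",
    "subject": "blindfolded Lady Justice with sword and scales",
    "setting": "courthouse steps, storm clouds",
    "mood": "epic",
    "lighting": "dramatic rays",
    "camera": "low-angle push-in",
    "detail_level": "ultra-realistic",
    "negatives": ["blurry", "extra limbs"],
}

# Assumed order in which descriptive fields are joined into the prompt.
DESCRIPTIVE = ("subject", "setting", "mood", "lighting", "camera", "detail_level")


def describe(brief: dict) -> str:
    """Join the non-empty descriptive fields in a fixed order."""
    return ", ".join(brief[k] for k in DESCRIPTIVE if brief.get(k))


def to_midjourney(brief: dict, ar: str = "16:9", stylize: int = 250) -> str:
    """MidJourney takes a single string; negatives go after --no."""
    flags = f"--ar {ar} --stylize {stylize}"
    if brief["negatives"]:
        flags += " --no " + ", ".join(brief["negatives"])
    return f"{describe(brief)} {flags}"


def to_stable_diffusion(brief: dict, seed: int, steps: int = 30, cfg_scale: float = 6.5) -> dict:
    """Stable Diffusion-style APIs take a JSON payload with separate fields."""
    return {
        "prompt": describe(brief),
        "negative_prompt": ", ".join(brief["negatives"]),
        "width": 1024, "height": 1024,
        "steps": steps, "cfg_scale": cfg_scale, "seed": seed,
    }


print(to_midjourney(BRIEF))
print(to_stable_diffusion(BRIEF, seed=123456789))
```

Keeping describe() shared between targets means a wording change in the brief reaches every platform at once.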

5) Input management tips

- Preset packs (Studio Portrait, Cinematic, Anime)

- Negative prompt libraries

- Seed strategies for reproducibility

- Prompt length discipline

- Version everything (prompt, model hash, sampler)
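For seed strategy and versioning, one option is to derive the seed deterministically from the brief itself, so the same brief always maps to the same seed, and to store it alongside the model and sampler identifiers. A sketch, assuming a SHA-256 hash of canonical JSON and a 32-bit seed range; the record's field names and the placeholder model and sampler values are hypothetical.

```python
import hashlib
import json


def canonical(brief: dict) -> str:
    """Stable JSON text: sorted keys and no extra whitespace."""
    return json.dumps(brief, sort_keys=True, separators=(",", ":"))


def seed_from_brief(brief: dict) -> int:
    """Derive a reproducible 32-bit seed from the brief's content."""
    digest = hashlib.sha256(canonical(brief).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def version_record(brief: dict, model_hash: str, sampler: str) -> dict:
    """Collect the identifiers needed to regenerate the same output later."""
    return {
        "brief_sha256": hashlib.sha256(canonical(brief).encode("utf-8")).hexdigest(),
        "seed": seed_from_brief(brief),
        "model_hash": model_hash,
        "sampler": sampler,
    }


brief = {"idea": "Lady Justice in storm", "negatives": ["blurry", "extra limbs"]}
print(version_record(brief, model_hash="<your-model-hash>", sampler="example-sampler"))
```

Because keys are sorted before hashing, reordering fields in the brief does not change the seed; changing any value does.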